Recently, the Crop Disease and Insect Pest Monitoring and Management Team from our college published a paper titled "Image detection model construction of Apolygus lucorum and Empoasca spp. based on improved YOLOv5" in the journal Pest Management Science. The study reports the team's latest progress in applying Deep Learning Models (DLM) to automatically extract target features for pest monitoring. Master's student Xiong Bo is the first author of the paper, and Associate Prof. Luo Chen and Associate Prof. Hu Zuqing are the corresponding authors.

Building an automated, intelligent, and precise pest monitoring and early warning system is the prerequisite and foundation for efficient pest control, and it is also a crucial component of “smart” agriculture. Automated pest recognition and counting are essential tools for such monitoring. The main methods in current use are infrared sensors and machine learning-based image recognition and counting, which relies on manually extracted pest features. These approaches suffer from low accuracy, poor generality, and difficulty in extracting pest features.

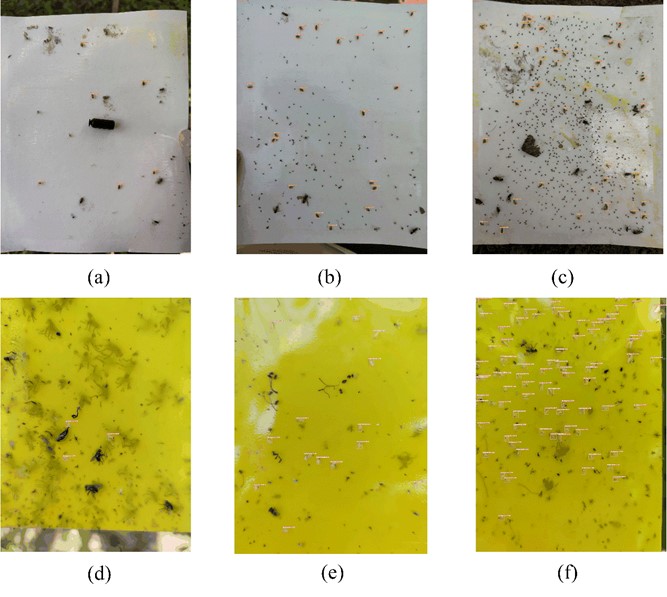

In this study, the team focused on Apolygus lucorum (Meyer-Dür), an important pest of kiwifruit, and Empoasca spp., small green leafhoppers. Using white and yellow sticky traps coated with sex pheromone, the team collected 1502 images of A. lucorum and Empoasca spp. in multiple kiwifruit orchards at different times of the season. The images were divided into training, validation, and test sets in a 7:2:1 ratio.

The team trained the fast single-stage detector YOLOv5s (YOLO: you only look once). Because single-stage models tend to have low accuracy when recognizing small insects, they made three improvements to YOLOv5s: replacing the activation function with Hard swish, introducing the SIoU loss function, and incorporating the SE (squeeze-and-excitation) attention mechanism. Through ablation experiments, they arrived at a new model, YOLOv5s_HSSE. On the test set, this model achieved a mean average precision (mAP) of 95.9% and a recall of 93.3% at a detection speed of 155 frames per second, outperforming other single-stage deep learning models such as SSD, YOLOv3, and YOLOv4.

The study also tested YOLOv5s_HSSE on images with high, medium, and low pest densities. The mAP and recall remained above 92.7% and 91.8%, respectively, at all three densities, with the best performance at medium density (mAP 96.3%, recall 94.0%). This study lays a solid foundation for precise pest monitoring.
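For readers unfamiliar with two of the modifications, the sketch below shows in plain Python what the Hard swish activation and an SE (squeeze-and-excitation) block compute on toy values. It is an illustration under stated assumptions, not the team's code: real YOLOv5 models use PyTorch layers (PyTorch provides `torch.nn.Hardswish`, for example), the weight matrices and reduction ratio below are hypothetical, biases are omitted, and the SIoU loss is not sketched.

```python
import math
from typing import List

# Toy feature map indexed as [channel][row][column]
FeatureMap = List[List[List[float]]]


def relu6(x: float) -> float:
    # Clamp to the range [0, 6]
    return min(max(x, 0.0), 6.0)


def hard_swish(x: float) -> float:
    # Hard swish: x * ReLU6(x + 3) / 6, a piecewise stand-in for swish
    return x * relu6(x + 3.0) / 6.0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def se_block(fmap: FeatureMap,
             w_reduce: List[List[float]],
             w_expand: List[List[float]]) -> FeatureMap:
    """Squeeze-and-excitation on a C x H x W map (biases omitted).

    w_reduce is (C // r) x C and w_expand is C x (C // r), where r is the
    reduction ratio. Both matrices are hypothetical toy weights here.
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields a weight in (0, 1) per channel
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w_reduce]
    scale = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_expand]
    # Scale: multiply every value in a channel by that channel's weight
    return [[[v * s for v in row] for row in ch] for ch, s in zip(fmap, scale)]


if __name__ == "__main__":
    print([round(hard_swish(x), 3) for x in (-1.0, 0.0, 1.0, 3.0)])
    fmap = [[[1.0, 2.0], [3.0, 4.0]],   # channel 0: strong activations
            [[0.5, 0.5], [0.5, 0.5]]]   # channel 1: weak activations
    print(se_block(fmap, w_reduce=[[0.5, 0.5]], w_expand=[[1.0], [-1.0]]))
```

With these toy weights, the SE block amplifies the strongly activated channel relative to the weak one, which is the intuition behind using channel attention to help a detector focus on features of small insects.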

Example detection results for pests at different densities using the YOLOv5s_HSSE detection model

(a) Low-density A. lucorum; (b) Medium-density A. lucorum; (c) High-density A. lucorum; (d) Low-density Empoasca spp.; (e) Medium-density Empoasca spp.; (f) High-density Empoasca spp.

The team is now applying this technology to other crops (wheat, tobacco, cruciferous vegetables, apples, etc.) to automatically recognize and count important target pests, and has developed a preliminary automatic monitoring and early warning system for target pests. The system exploits the color preferences and communication behavior of the target pests. Internet of Things (IoT) monitors installed in the field automatically roll the sticky trap, take high-resolution photographs, and upload the photos to a cloud database, so that monitoring data are collected and uploaded fully automatically and in real time. Deep learning models then identify and count the target pests in the photos, enabling fully automatic, real-time analysis of the monitoring data. Finally, by combining local meteorological data with machine learning and biological models, the system issues real-time early warnings for target pests. Users can view the monitoring data and warning information on websites or mobile applications. The system provides reliable decision support for the precise control of crop target pests.
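The counting and alerting steps can be sketched as follows, assuming the detector returns one labelled, scored box per insect on each uploaded trap photo. The class names, the 0.5 confidence cut-off, and the per-photo alert thresholds are hypothetical; the real system's warnings also draw on meteorological data, machine learning, and biological models, which this sketch does not attempt to reproduce.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Detection:
    label: str         # hypothetical class name, e.g. "A_lucorum" or "Empoasca"
    confidence: float  # detector score in [0, 1]


def count_pests(detections: Iterable[Detection], min_conf: float = 0.5) -> Dict[str, int]:
    """Count detections per pest class, ignoring boxes scored below min_conf."""
    return dict(Counter(d.label for d in detections if d.confidence >= min_conf))


def pests_over_threshold(counts: Dict[str, int], thresholds: Dict[str, int]) -> List[str]:
    """Return pest classes whose count on one photo reaches a hypothetical alert threshold."""
    return sorted(label for label, limit in thresholds.items()
                  if counts.get(label, 0) >= limit)


if __name__ == "__main__":
    # Toy detector output for one uploaded trap photo
    photo = [Detection("A_lucorum", 0.91), Detection("A_lucorum", 0.42),
             Detection("Empoasca", 0.88), Detection("Empoasca", 0.77)]
    counts = count_pests(photo)
    print(counts)  # {'A_lucorum': 1, 'Empoasca': 2}
    print(pests_over_threshold(counts, {"A_lucorum": 5, "Empoasca": 2}))  # ['Empoasca']
```

Filtering by confidence before counting trades a few missed insects for fewer false counts; where that cut-off sits would in practice be tuned against the recall figures a model achieves on field images.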

This study was funded by the National Key Research and Development Program (2022YFD1400403), among other projects.

Original Link: https://onlinelibrary.wiley.com/doi/full/10.1002/ps.7964